In today’s always-on world of consumer research, the speed of insights is everything. But speed without accuracy is dangerous, especially when your data is under attack. The rise of research panel bots has introduced a new layer of risk for insights teams, flooding studies with fraudulent responses and putting the quality of decision-making in jeopardy.

This blog breaks down what’s really happening with research panel bots, what’s at stake for your brand, and how to protect your insights with better methodologies, smarter detection, and built-in defense. We’ll cover:

- The rise of research panel bots and what that means for data quality

- Why basic security checks aren’t cutting it anymore

- How Suzy’s layered approach and proprietary technologies like Biotic make all the difference

- The methodologies you should be using to fight back

The Data Quality Crisis in Consumer Research

It’s an open secret in the insights industry that a significant chunk of online survey data is unreliable. One Pew Research study found 84% of bogus respondents passed trap questions and 87% evaded speed checks.

However, there is a ray of hope. One of the most effective indicators that a response may be fraudulent or AI-generated is its perfection. Human behavior, especially in open-ended feedback and qualitative responses, is inherently inconsistent, emotional, and sometimes contradictory. Bots, on the other hand, often overcorrect, producing polished, hyper-consistent answers that are too smooth to be true. This "standardization of perfection" becomes a red flag. Real people make mistakes. They hesitate. They change their minds. And that natural variability becomes a powerful asset in spotting bad actors.

Impact Across Key Consumer Industries

The stakes are high because billions of dollars in decisions are guided by consumer research data each year. One industry estimate suggests that about $1 trillion worth of business and advertising decisions are based on survey-based insights annually. If that input data is skewed by bots or bad samples, every downstream decision, from product development to marketing strategy, is at risk. Multi-million dollar launches of new products have floundered in the past due to faulty consumer insights, and bot-infested data only raises that risk.

Let’s look at how this challenge affects specific consumer sectors:

Consumer Packaged Goods (CPG)

CPG brands in household supplies and personal care rely heavily on surveys and panels to shape product development, packaging, and advertising. But when 30–40% of respondents are fake or inattentive, companies risk making decisions based on bad data, believing a product variation is a hit (or flop) when it’s not. The stakes are even higher with niche audiences, like “eco-conscious cleaners” or “natural beauty buyers,” where bots and fraudulent profiles often slip in. If even 100 out of 500 “real” responses are fake, a brand might misread preferences around scent, packaging, or pricing, leading to costly missteps. In a space driven by tight margins and fast-moving trends, tainted data can derail product launches and erode consumer trust.
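To make that arithmetic concrete, here is a rough sketch using the 100-out-of-500 scenario above. The preference shares are illustrative assumptions, not figures from any real study: suppose 40% of the 400 genuine respondents favor a new scent, while all 100 fake respondents select it (say, because it is the first option listed).

```python
def observed_share(n_real: int, real_share: float, n_fake: int, fake_share: float) -> float:
    """Share of all responses favoring an option when real and fake answers are mixed."""
    favorable = n_real * real_share + n_fake * fake_share
    return favorable / (n_real + n_fake)

# Hypothetical inputs: 400 real respondents (40% favor the scent), 100 fakes (all favor it)
share = observed_share(n_real=400, real_share=0.40, n_fake=100, fake_share=1.0)
print(f"Observed preference: {share:.0%}")  # 52%, versus a true 40%
```

Under these assumptions, a minority preference reads as a majority one, which is exactly the kind of gap that can turn a "flop" into an apparent "hit."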

Food & Beverage

In F&B, taste is everything, but bots can skew flavor research and product testing. One pizza chain’s survey showed a sudden spike in love for sardines, thanks to bots, not a real craving. Launching a product based on fake data (like a too-sweet drink or offbeat flavor) leads to wasted spend and consumer disconnect. Even worse, bots could distort surveys about dietary habits, leading to misguided health claims or labeling decisions. Fake reviews are also a problem: inflated ratings from bots can cause brands to overestimate success, while negative review attacks can unfairly tank new launches.

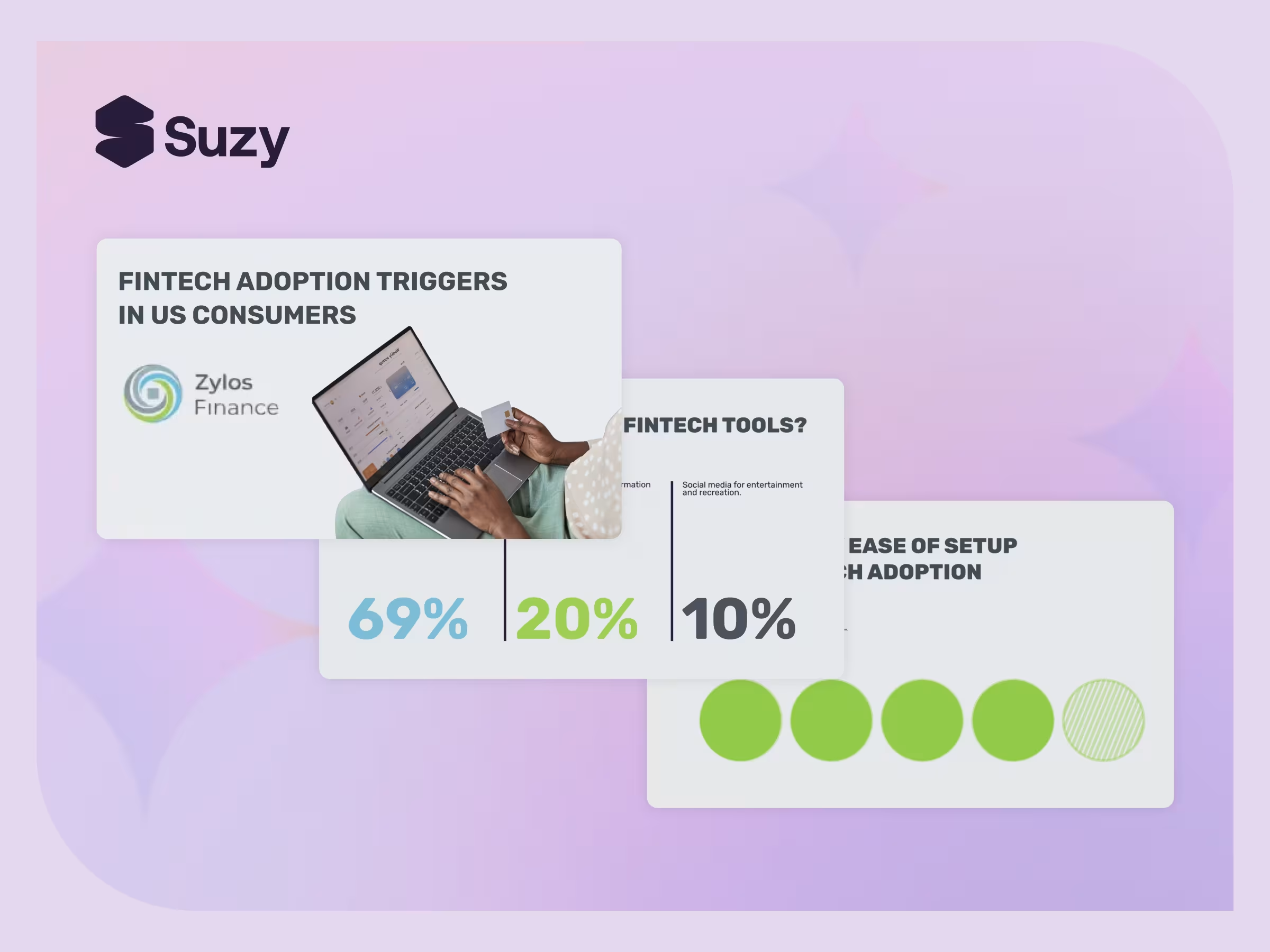

Consumer Finance

Banks, fintechs, and insurers use research to guide features and services, but bad data here is risky. Bots or duplicate profiles can skew findings, making it seem like customers want a certain perk (like crypto rewards or round-up savings) when they don’t. Fraudsters also target financial surveys for high incentives, making it harder to gather representative data across income or age groups. The result: fintechs may chase the wrong priorities and miss the mark on what customers really value, whether that is trust, fraud protection, or ease of use.

Consumer Technology

Tech brands engage users directly, but ironically, tech-savvy fraudsters and bots often infiltrate that feedback loop. A survey on a new gadget might get flooded with fake entries, pushing fringe features that real users don’t care about. Developers could end up prioritizing the wrong things, misallocating resources, or misunderstanding their user base. Fake reviews also distort sentiment on e-commerce sites and app stores, forcing teams to sift real feedback from bot-generated noise. For an industry built on iteration, bad input leads to bad output.

Other Consumer-Driven Sectors

Retail, travel, automotive, and even public policy research all face the same threat. A hotel may chase the wrong amenity upgrade, or a car brand may misjudge trust in self-driving features, all based on fake survey responses. One public health survey infamously suggested that 39% of Americans had engaged in risky cleaning practices such as gargling bleach, a figure likely inflated by mischievous or bot-driven responses. Across sectors, flawed data means flawed decisions: wasted marketing, misfired products, and customer strategies that feel out of touch. Bots and bad data are a systemic threat to “customer-first” thinking.

The Problem: Research Panel Bots Are Getting Smarter

It’s not just a few rogue responses anymore. Studies show up to 40% of survey responses from panel providers are low-quality, and in extreme cases, over 70% can be outright fraudulent. Research panel bots, automated scripts that impersonate real respondents, are sophisticated enough to dodge attention checks, mimic human timing, and even pass open-ended traps.

These bots infiltrate surveys to claim incentives at scale, polluting datasets with junk responses that look valid but provide no real consumer signal. Worse, the rise of AI is enabling even real human respondents to lean on generative tools to answer surveys, creating response inflation and bias. Whether synthetic or AI-assisted, this new wave of behavior is eroding trust in traditional research panels.

What makes this even more concerning is that many data quality practices are still rooted in outdated, reactive approaches, like relying on speeding, straightlining, or gibberish filters to flag poor-quality responses after data collection has already happened. These checks often fail to catch today’s more advanced fraud and push the burden onto researchers to clean data manually, repurchase sample, or refield studies, wasting both time and budget.
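For readers who have not seen these traditional checks up close, here is a minimal sketch of what post-hoc speeding and straightlining filters typically look like. The function names, the one-third-of-median cutoff, and the sample data are all assumptions chosen for illustration, not any vendor's actual rules.

```python
from statistics import median

def flag_speeders(durations_sec: dict[str, float], fraction: float = 0.33) -> set[str]:
    """Flag respondents who finished in under `fraction` of the median completion time."""
    cutoff = median(durations_sec.values()) * fraction
    return {rid for rid, secs in durations_sec.items() if secs < cutoff}

def is_straightliner(grid_answers: list[int]) -> bool:
    """True when every item in a rating grid received the same answer."""
    return len(grid_answers) > 1 and len(set(grid_answers)) == 1

# Hypothetical completion times in seconds, keyed by respondent ID
durations = {"r1": 310.0, "r2": 295.0, "r3": 42.0, "r4": 330.0}
speeders = flag_speeders(durations)
print(speeders)                         # only r3 falls below the cutoff
print(is_straightliner([3, 3, 3, 3]))   # True
```

Notice that both checks run only after the responses are collected, and a bot that paces itself and varies its grid answers passes both without difficulty.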

How AI Is Amplifying the Challenge

The rise of advanced AI has been a double-edged sword for consumer research. On one hand, AI helps analyze data, but on the other, AI-powered bots are making fraudulent responses more sophisticated. Generative AI tools can craft human-like answers at scale, allowing fraud farms to fill out thousands of surveys with minimal effort. These responses are often surprisingly coherent and polished, easily dodging traditional red flags. For instance, AI can randomize answers and even add slight typos or variability to appear human, all while completing surveys in seconds.

Even real human participants are now sometimes using AI to cheat or expedite their survey responses. Recent research from Stanford and NYU found that nearly one-third of online survey takers admitted to using AI (like ChatGPT) to help answer open-ended questions. These AI-assisted answers tend to be longer, more formulaic, and unnaturally polite – lacking the typical quirks or emotion genuine answers have. The worry is that widespread AI usage could homogenize responses, diluting the diversity of opinions. If many respondents (or bots) use the same AI models to generate answers, surveys might start reflecting the AI’s “average” answer rather than true consumer sentiment.

Meanwhile, most traditional quality control systems were never designed to catch this level of sophistication. Speeding and straightlining checks are too shallow to detect AI-generated or fraud-coordinated responses that mimic human behavior. Relying solely on such checks is akin to using a thermometer to predict a storm: it reacts to problems after they’ve already done damage.

Instead of post-hoc detection, proactive and multi-layered prevention, like that used by Biotic, which monitors behavior throughout the respondent lifecycle, is becoming critical. Without this shift, even sophisticated-looking datasets may be deeply flawed, and the cost of cleaning up that mess continues to fall squarely on researchers and brands.

Enter Biotic: Suzy’s Real-Time Fraud Prevention Engine

Biotic takes a fundamentally different approach than traditional (and now outdated) quality measures. Built by Suzy to evolve alongside the very AI tools enabling survey fraud, Biotic is a proactive defense system that stops bad data before it ever enters your research.

Traditional methods like speeding or straightlining checks only flag poor responses after the damage is done. Biotic flips that model. Suzy deploys Biotic across all of our panel supply sources, providing a layer of defense across the entire research lifecycle. Biotic can identify how individual and collective respondent behavior changes over time, making it possible to spot anomalies and evolving patterns that more siloed systems would miss. This ecosystem-wide view allows Biotic to intervene before a fraudulent respondent even reaches a survey.

For our proprietary panel, at participant sign-up, Biotic deploys a rigorous, multi-layered screening process to stop fraud before it starts. Every new participant is vetted through IP and email domain blocking to exclude known bad actors and suspicious domains like disposable or temporary addresses. Email verification ensures the account is valid and in use, while double verification through SMS adds another layer of identity confirmation. Device fingerprinting tools like Verisoul flag spoofed or previously banned devices, and CAPTCHA tests, combined with Azure Vision AI photo verification, help confirm a real human is behind the screen. Onboarding questions are also used to gauge attention and reinforce respondent expectations. Only users who pass all of these checks are allowed into the research ecosystem, ensuring that fraud is filtered out before any data is collected.
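The "pass every layer or stay out" logic described above can be sketched in a few lines. This is not Biotic's implementation: the `Applicant` fields, check names, and blocklist below are hypothetical stand-ins, and the results of external services (SMS verification, device fingerprinting, CAPTCHA) are represented as simple booleans rather than real API calls.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical blocklist for illustration only
DISPOSABLE_DOMAINS = {"mailinator.com", "tempmail.example"}

@dataclass
class Applicant:
    email: str
    email_verified: bool
    sms_verified: bool
    device_previously_banned: bool
    passed_captcha: bool

def email_domain_allowed(a: Applicant) -> bool:
    """Reject sign-ups whose email domain is on the disposable-address blocklist."""
    return a.email.rsplit("@", 1)[-1].lower() not in DISPOSABLE_DOMAINS

# Each layer is a (name, check) pair; an applicant must pass all of them
CHECKS: list[tuple[str, Callable[[Applicant], bool]]] = [
    ("email_domain", email_domain_allowed),
    ("email_verified", lambda a: a.email_verified),
    ("sms_verified", lambda a: a.sms_verified),
    ("device", lambda a: not a.device_previously_banned),
    ("captcha", lambda a: a.passed_captcha),
]

def screen(applicant: Applicant) -> list[str]:
    """Return the names of failed checks; an empty list means the applicant is admitted."""
    return [name for name, check in CHECKS if not check(applicant)]

good = Applicant("pat@example.org", True, True, False, True)
bad = Applicant("bot@mailinator.com", True, False, False, True)
print(screen(good))  # []
print(screen(bad))   # ['email_domain', 'sms_verified']
```

Keeping the layers in a list makes it easy to add a new check as fraud tactics change, and recording which layers failed gives a quality team something concrete to review.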

For all of our panel supply sources, once a respondent begins a survey, Biotic continues to guard data quality with real-time monitoring. It analyzes behavioral patterns against historical benchmarks to catch signals of fraud or disengagement as they happen. This includes tracking for speeding, straightlining, and inconsistent or irrelevant open-ended responses. Biotic also monitors email and IP activity for signs of VPN usage or profile manipulation mid-study. Suzy’s data quality team reviews flagged open-ends in real time to ensure that responses entering the dataset are relevant, unique, and authentically human. This tight loop of automation plus human oversight gives Biotic the agility to intercept suspicious activity as it unfolds—not after the fact.

Between surveys, Biotic remains active behind the scenes. It tracks longitudinal behavior across the panel, identifying unusual incentive redemption patterns like rapid cash-outs that may indicate bot activity or fraud farms. It also strategically inserts trap questions designed to be easy for humans but difficult for inattentive users or bots to answer correctly. These passive and active defenses ensure that fraud is detected even in quieter periods, enabling Biotic to maintain a continuously high standard of respondent quality across all research engagements.
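As a rough illustration of what flagging "rapid cash-outs" could involve, the sketch below counts incentive redemptions inside a sliding time window. The threshold of more than three redemptions in 24 hours is an assumption picked for the example; a production system would presumably tune it against historical panel behavior.

```python
from datetime import datetime, timedelta

def has_rapid_cashouts(
    redemptions: list[datetime],
    max_count: int = 3,
    window: timedelta = timedelta(hours=24),
) -> bool:
    """True if more than `max_count` redemptions fall inside any span of length `window`."""
    times = sorted(redemptions)
    start = 0
    for end, t in enumerate(times):
        # Advance the window start until it is within `window` of the current redemption
        while t - times[start] > window:
            start += 1
        if end - start + 1 > max_count:
            return True
    return False

base = datetime(2024, 1, 1, 9, 0)
burst = [base + timedelta(hours=h) for h in range(5)]   # five cash-outs in four hours
steady = [base + timedelta(days=d) for d in range(5)]   # one cash-out per day
print(has_rapid_cashouts(burst))   # True
print(has_rapid_cashouts(steady))  # False
```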

When Biotic detects suspicious behavior, such as failed trap questions or fraud-like response patterns, the respondent isn’t immediately removed. Instead, they’re routed into a “honeypot,” a silent sandbox environment where they no longer impact real research. From their perspective, everything appears normal, but they’re actually engaging in controlled studies designed to help Biotic learn from their tactics. This stealthy redirection gives Biotic a major advantage: bad actors don’t know they’ve been flagged, so they can’t adapt their behavior in response. Meanwhile, Biotic evolves in real time, using machine learning and new fraud signals, like recent innovations in detecting fuzzy duplicate answers, to stay ahead of even the most sophisticated threats.
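To give a feel for what "fuzzy duplicate" detection means, here is a minimal sketch using Python's standard-library `difflib`. It is a generic technique, not Biotic's method: the 0.9 similarity threshold and the sample answers are assumptions, and comparing every pair of answers this way only scales to small batches.

```python
from difflib import SequenceMatcher
from itertools import combinations

def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial edits do not hide a duplicate."""
    return " ".join(text.lower().split())

def fuzzy_duplicates(answers: dict[str, str], threshold: float = 0.9) -> list[tuple[str, str]]:
    """Return pairs of respondent IDs whose open-ended answers are near-identical."""
    pairs = []
    for (id_a, a), (id_b, b) in combinations(answers.items(), 2):
        similarity = SequenceMatcher(None, normalize(a), normalize(b)).ratio()
        if similarity >= threshold:
            pairs.append((id_a, id_b))
    return pairs

# Hypothetical open-ended responses keyed by respondent ID
answers = {
    "r1": "I love the fresh scent and the packaging is easy to open.",
    "r2": "I love the fresh scent, and the packaging is easy to open!",
    "r3": "Too expensive for what you get.",
}
print(fuzzy_duplicates(answers))  # [('r1', 'r2')]
```

An exact-match filter would miss the r1/r2 pair because of the added comma and changed punctuation, which is why near-match scoring is useful against copy-and-tweak fraud.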

How Researchers Can Fight Back

Research panel bots may be evolving fast, but that doesn’t mean researchers are powerless. There are clear, actionable steps you can take to safeguard your insights and outpace bad actors.

First and foremost, adopt a multi-layered fraud prevention system, not just detection after the fact. Solutions like Suzy’s Biotic proactively screen out bad data across the entire respondent journey, from onboarding to incentive redemption, minimizing the risk of contamination before it even begins. A single check for speeding or straightlining isn’t enough anymore; your defense needs to be continuous, adaptive, and AI-aware.

Second, be diligent in choosing your partners. Push your panel and research providers to be transparent about their quality assurance processes. Don’t settle for vague claims like “we monitor for fraud.” Instead, ask pointed questions:

- How are you preventing—not just detecting—fraudulent responses in real time?

- What new fraud behaviors have you identified recently, and how has your system adapted?

- Do you rely solely on automation, or is human oversight involved in data validation?

- How do you ensure data remains clean when blending third-party panels with proprietary ones?

- If data quality issues are found, do you offer resampling at no extra cost?

Lastly, don’t treat data cleaning as an afterthought. The cost of letting low-quality responses slip through isn’t just monetary—it can lead to misguided strategies, product failures, and reputational damage. By investing in preventative infrastructure and asking the right questions, you can protect your insights, your brand, and your bottom line.

Fighting bots requires vigilance, evolution, and the right partners. But with the right approach, researchers can reclaim control—and confidence—in the data that drives everything.

Ready to Take Control of Your Data?

Don’t wait for bad data to derail your next big decision. If you’re serious about protecting your research from bots, fraud, and AI-generated noise, it’s time to upgrade from reactive data cleaning to proactive prevention.

Learn how Biotic can safeguard your insights from start to finish, before fraud ever enters the equation.

👉 Talk to our team to see Biotic in action and explore how Suzy can elevate the quality of your consumer research.

Because clean data isn’t a nice-to-have, it’s your competitive edge.
